Latent Timbre Synthesis

Fast Deep Learning tools for experimental electronic music

by Kıvanç Tatar, Daniel Bisig, and Philippe Pasquier

Latent Timbre Synthesis is a new audio synthesis method using deep learning. The synthesis method allows composers and sound designers to interpolate and extrapolate between the timbre of multiple sounds using the latent space of audio frames. The implementation includes a fully working application with a graphical user interface, called interpolate_two, which enables practitioners to generate timbres between two audio excerpts of their selection using interpolation and extrapolation in the latent space of audio frames. Our implementation is open source, and we aim to improve the accessibility of this technology by providing a guide for users with any technical background. Our study includes a qualitative analysis where nine composers evaluated the Latent Timbre Synthesis and the interpolate_two application within their practices.

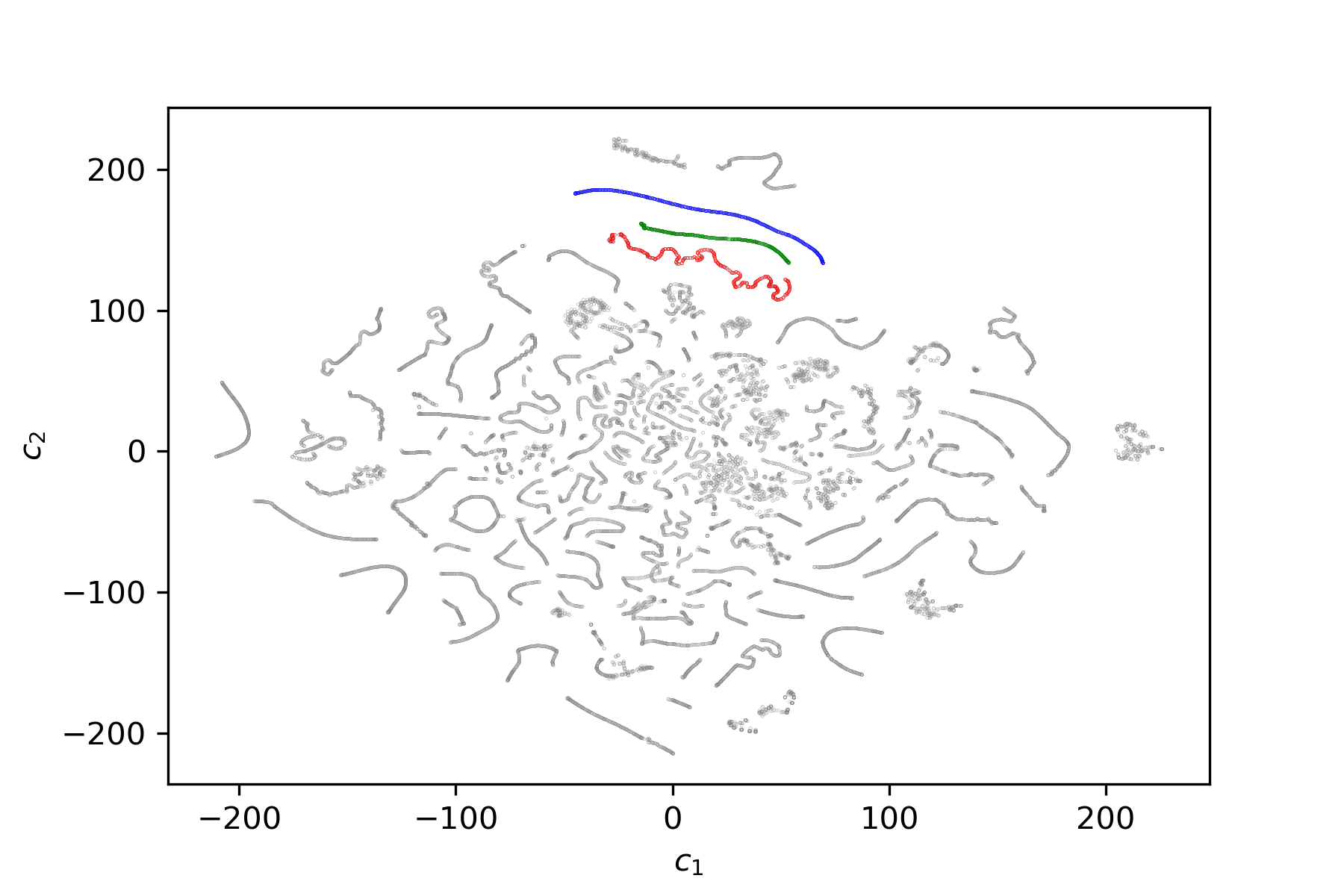

The visualizations below illustrate a latent space generated by a Variational Autoencoder. On the right, the green and red dots represent latent vectors of audio frames of two audio examples from the dataset, while the green is the latent vectors of a synthesized sound generated by interpolating the latent vectors of two original audio files.

Publications

->Tatar, K., Bisig, D., & Pasquier, P. Latent Timbre Synthesis: Audio-based Variational Auto-Encoders for Music Composition Applications. The Special Issue of Neural Computing and Applications: “Networks in Art, Sound and Design.”https://doi.org/10.1007/s00521-020-05424-2

->Tatar, K., Bisig, D., & Pasquier, P. (2020). Introducing Latent Timbre Synthesis. https://arxiv.org/abs/2006.00408

Compilation Album

💿️

Nine composers joined our qualitative study and composed short pieces using Latent Timbre Synthesis.Source code

︎

Examples

The naming convention of example audio files are as follows. Original 1 and Original 2 are the excerpts of original samples. 00-original-icqt+gL_1 and 00-original-icqt+gL_2 tracks are generated using the original magnitude spectrums, and phase is added after using a reconstruction technique. Likewise, our Deep Learning model generates only the magnitude spectrum, and phase is added later using a reconstruction technique. Hence, the original-icqt+gL_1 and original-icqt+gL_2 are the ideal reconstructions that the Deep Learning model aims to achieve during the training.

Reconstructions-> 00-x_interpolations 0.0 and 00-x_interpolations 1.0 are reconstructions of the original audio files using the Deep Learning model, original 1 and 2 respectively. Ideally, these reconstructions should be as close as possible to the original magnitude responses combined with phase estimations; which is the original-icqt+gL_1 and original-icqt+gL_2 files, respectively.

Timbre Interpolations-> 00-x_interpolations 0.1 means that this sample is generated using 90% of the timbre of original_1 and 10% of the timbre of original_2. Try to think 0.1 almost like a slider value from audio_1 to audio_2.

Timbre Extrapolations-> x_interpolations-1.1 means that we are drawing an abstract line between timbre example 1 and 2, and then following the direction of that line, we are moving further away from the timbre 2 by 10%. X Extrapolations -0.1 means we are drawing a line between timbre 2 and 1, and moving further away from timbre 1 in that direction by 10%.

An example video of interpolate_two app is on the way! We are also finalizing a set of visualizations as well as a qualitative study. I will keep this page updated as we progress.

Acknowledgements

This work has been supported by the Swiss National Science Foundation, the Natural Sciences and Engineering Research Council of Canada, and Social Sciences and Humanities Research Council of Canada.

Ce travail est supporté par le Fonds national Suisse de la recherche scientifique, le Conseil national des sciences et de l’ingénieurie du Canada, et le Conseil national des sciences humaines et sociales du Canada.